Kechen Song (Associate Professor)


- Supervisor of Doctorate Candidates; Supervisor of Master's Candidates

- Name (English): Kechen Song

- E-Mail:

- Education Level: With Certificate of Graduation for Doctorate Study

- Gender: Male

- Degree: Doctorate

- Status: Employed

- Alma Mater: Northeastern University

- Teacher College: School of Mechanical Engineering & Automation


Robotic Visual Grasping Detection

Journal Papers (incomplete):

1. Ling Tong, Kechen Song*, Hongkun Tian, Yi Man, Yunhui Yan and Qinggang Meng. A Novel RGB-D Cross-Background Robot Grasp Detection Dataset and Background-Adaptive Grasping Network [J]. IEEE Transactions on Instrumentation and Measurement, 2024, 73, 9511615.

2. Hongkun Tian, Kechen Song*, Ling Tong, Yi Man and Yunhui Yan. Robot Unknown Objects Instance Segmentation based on Collaborative Weight Assignment RGB-Depth Fusion Strategy [J]. IEEE/ASME Transactions on Mechatronics, 2024, 29(3), 2032-2043.

3. Yunhui Yan, Hongkun Tian, Kechen Song*, Yuntian Li, Yi Man, Ling Tong. Transparent Object Depth Perception Network for Robotic Manipulation Based on Orientation-aware Guidance and Texture Enhancement [J]. IEEE Transactions on Instrumentation and Measurement, 2024, 73, 7505711.

4. Yunhui Yan, Ling Tong, Kechen Song*, Hongkun Tian, Yi Man, Wenkang Yang. SISG-Net: Simultaneous Instance Segmentation and Grasp Detection for Robot Grasp in Clutter [J]. Advanced Engineering Informatics, 2023, 58, 102189.

5. Hongkun Tian, Kechen Song*, Jing Xu, Shuai Ma, Yunhui Yan. Antipodal-Points-aware Dual-decoding Network for Robotic Visual Grasp Detection Oriented to Multi-object Clutter Scenes [J]. Expert Systems With Applications, 2023, 230, 120545.

6. Ling Tong, Kechen Song*, Hongkun Tian, Yi Man, Yunhui Yan, Qinggang Meng. SG-Grasp: Semantic Segmentation Guided Robotic Grasp Oriented to Weakly Textured Objects Based on Visual Perception Sensors [J]. IEEE Sensors Journal, 2023, 23(22), 28430-28441.

7. Hongkun Tian, Kechen Song*, Song Li, Shuai Ma, Jing Xu and Yunhui Yan. Data-driven Robotic Visual Grasping Detection for Unknown Objects: A Problem-oriented Review [J]. Expert Systems With Applications, 2023, 211, 118624.

8. Hongkun Tian, Kechen Song*, Song Li, Shuai Ma, Yunhui Yan. Rotation Adaptive Grasping Estimation Network Oriented to Unknown Objects Based on Novel RGB-D Fusion Strategy [J]. Engineering Applications of Artificial Intelligence, 2023, 120, 105842.

9. Hongkun Tian, Kechen Song*, Song Li, Shuai Ma and Yunhui Yan. Light-weight Pixel-wise Generative Robot Grasping Detection Based on RGB-D Dense Fusion [J]. IEEE Transactions on Instrumentation and Measurement, 2022, 71, 5017912.

RGB-D Cross-Background Robot Grasp Detection Dataset (CBRGD)


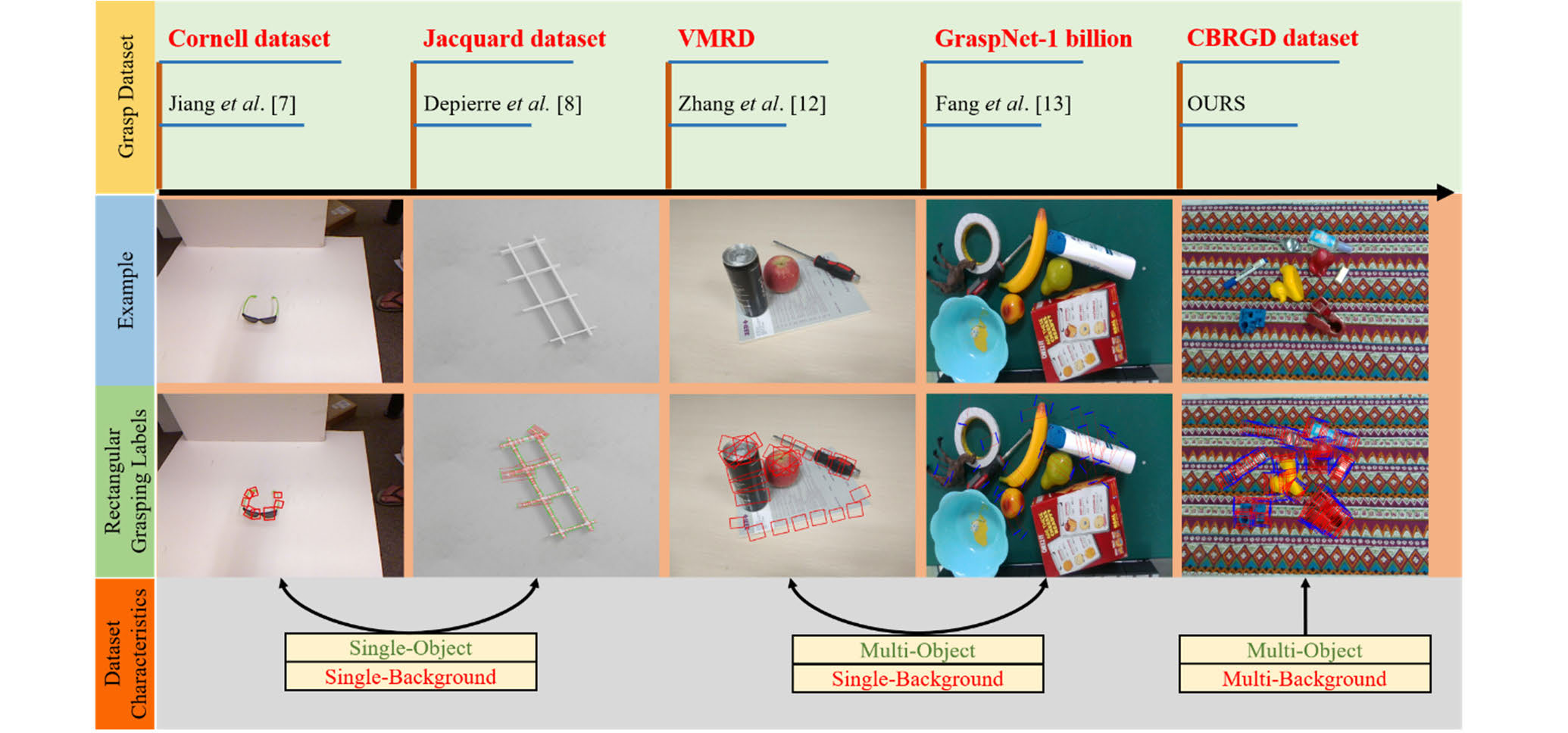

This paper presents a novel RGB-D cross-background robot grasp detection dataset (CBRGD), which aims to improve the performance of intelligent robots in diverse and dynamic scenarios. The CBRGD dataset consists of seven common backgrounds and 58 common objects, covering a variety of grasping scenarios. Alongside the dataset, we propose a background-adaptive robot multiobject grasp detection network named BA-Grasp. The bimodal foreground activation strategy (BFAS) suppresses background noise while highlighting the target objects in the foreground, and the multiscale foreground region features enhancement module (MFRFEM) allows the network to adapt to objects of different sizes while emphasizing important foreground regions. Extensive experiments indicate that our robotic grasping method has significant advantages across varied scenarios and complex, variable objects. The proposed method achieves accuracies of 97.8% and 96.7% on the public Cornell dataset and 94.6% on the Jacquard dataset, comparable to state-of-the-art (SOTA) methods. Moreover, on the CBRGD dataset, it improves average accuracy by 5%–10% over SOTA methods. Robot grasping experiments validate that our method maintains high grasping accuracy in real-world applications. Experimental videos and the CBRGD dataset are available at https://github.com/meiguiz/BA-Grasp.
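The Cornell and Jacquard accuracies quoted above are usually computed with the rectangle metric: a predicted grasp rectangle counts as correct if, for at least one ground-truth rectangle, the orientation differs by less than 30° and the Jaccard index (IoU) exceeds 0.25. The sketch below implements that metric in plain Python under stated assumptions: the rectangle is parameterised as `(x, y, theta, w, h)` with `theta` in radians, and the helper names are hypothetical. It illustrates the evaluation criterion only, not BA-Grasp itself.

```python
import math

def rect_corners(x, y, theta, w, h):
    """Corners of a grasp rectangle centred at (x, y), rotated by theta (radians).
    w is the gripper opening (along theta), h the jaw size; both assumed > 0.
    Corners come back in counter-clockwise order (positive signed area)."""
    c, s = math.cos(theta), math.sin(theta)
    wx, wy = c * w / 2, s * w / 2      # half-width vector along the grasp axis
    hx, hy = -s * h / 2, c * h / 2     # half-height vector perpendicular to it
    return [(x + wx + hx, y + wy + hy), (x - wx + hx, y - wy + hy),
            (x - wx - hx, y - wy - hy), (x + wx - hx, y + wy - hy)]

def polygon_area(pts):
    """Signed shoelace area; positive for counter-clockwise vertex order."""
    return sum(x1 * y2 - x2 * y1
               for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1])) / 2.0

def clip(subject, clipper):
    """Sutherland-Hodgman clipping of `subject` by the convex CCW polygon `clipper`."""
    def inside(p, a, b):
        # p lies on or to the left of the directed edge a -> b
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def intersect(p, q, a, b):
        # intersection of segment p-q with the infinite line through a and b
        (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p, q, a, b
        den = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
        t = ((x1 - x3) * (y3 - y4) - (y1 - y3) * (x3 - x4)) / den
        return (x1 + t * (x2 - x1), y1 + t * (y2 - y1))

    output = subject
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        inp, output = output, []
        if not inp:
            break  # nothing left to clip: the polygons do not overlap
        prev = inp[-1]
        for cur in inp:
            if inside(cur, a, b):
                if not inside(prev, a, b):
                    output.append(intersect(prev, cur, a, b))
                output.append(cur)
            elif inside(prev, a, b):
                output.append(intersect(prev, cur, a, b))
            prev = cur
    return output

def rect_iou(r1, r2):
    """Jaccard index of two (x, y, theta, w, h) grasp rectangles."""
    p1, p2 = rect_corners(*r1), rect_corners(*r2)
    inter = clip(p1, p2)
    ia = abs(polygon_area(inter)) if len(inter) >= 3 else 0.0
    return ia / (abs(polygon_area(p1)) + abs(polygon_area(p2)) - ia)

def angle_diff(a, b):
    """Orientation difference of two grasps; a parallel-jaw grasp is symmetric under pi."""
    d = abs(a - b) % math.pi
    return min(d, math.pi - d)

def is_correct(pred, gts, iou_thr=0.25, angle_thr=math.radians(30)):
    """Rectangle metric: correct if any ground truth is close in angle and overlaps enough."""
    return any(angle_diff(pred[2], g[2]) < angle_thr and rect_iou(pred, g) > iou_thr
               for g in gts)
```

For example, two axis-aligned 4 × 2 rectangles offset by 2 pixels along the grasp axis overlap in a 2 × 2 region, giving an IoU of 4 / (8 + 8 − 4) = 1/3, so `is_correct` accepts the prediction. Dataset accuracy is then the fraction of test images whose top-ranked prediction is correct.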

Ling Tong, Kechen Song, Hongkun Tian, Yi Man, Yunhui Yan and Qinggang Meng. A Novel RGB-D Cross-Background Robot Grasp Detection Dataset and Background-Adaptive Grasping Network [J]. IEEE Transactions on Instrumentation and Measurement, 2024, 73, 9511615. (paper) (code)

Robot Unknown Objects Instance Segmentation Based on Collaborative Weight Assignment RGB-Depth Fusion Strategy

This paper proposes a collaborative weight assignment (CWA) fusion strategy for fusing RGB and depth (RGB-D) data. It contains three carefully designed modules: a motivational pixel weight assignment (MPWA) module, a dual-direction spatial weight assignment (DSWA) module, and a stepwise global feature aggregation (SGFA) module. The method adaptively assigns fusion weights between the two modalities to better exploit RGB-D features across multiple dimensions. On the popular GraspNet-1Billion and WISDOM RGB-D robot manipulation datasets, the proposed method achieves performance competitive with state-of-the-art techniques, showing that it makes good use of the information in both modalities. Furthermore, we deployed the fusion model on the AUBO i5 robotic manipulation platform to test its segmentation and grasp optimization performance on unknown objects. Qualitative and quantitative experiments show that the proposed method achieves robust performance.
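The internals of MPWA, DSWA and SGFA are not described in this abstract. As a rough illustration of the general idea of assigning fusion weights between two modalities per pixel, the NumPy sketch below scores each modality at every pixel, normalises the scores with a softmax so the two weights sum to 1, and blends the feature maps. The function name and the projection `w_score` (standing in for a learned 1×1 convolution) are hypothetical; this is a generic weighted-fusion sketch, not the CWA strategy.

```python
import numpy as np

def softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def pixel_weighted_fusion(f_rgb, f_depth, w_score):
    """
    f_rgb, f_depth: feature maps of shape (C, H, W).
    w_score: hypothetical (2, 2C) projection standing in for a learned 1x1 conv
             that scores each modality at each pixel.
    Returns the fused map (C, H, W) and per-pixel modality weights (2, H, W).
    """
    stacked = np.concatenate([f_rgb, f_depth], axis=0)    # (2C, H, W)
    scores = np.einsum("kc,chw->khw", w_score, stacked)  # (2, H, W) per-pixel scores
    weights = softmax(scores, axis=0)                     # weights sum to 1 at each pixel
    fused = weights[0] * f_rgb + weights[1] * f_depth     # broadcast over channels
    return fused, weights
```

With an all-zero `w_score` both modalities receive weight 0.5 everywhere and the output reduces to the plain average; in a trained network the weights would instead shift towards whichever modality is more reliable at each pixel.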

Hongkun Tian, Kechen Song, Ling Tong, Yi Man and Yunhui Yan. Robot Unknown Objects Instance Segmentation based on Collaborative Weight Assignment RGB-Depth Fusion Strategy [J]. IEEE/ASME Transactions on Mechatronics, 2024, 29(3), 2032-2043. (paper)

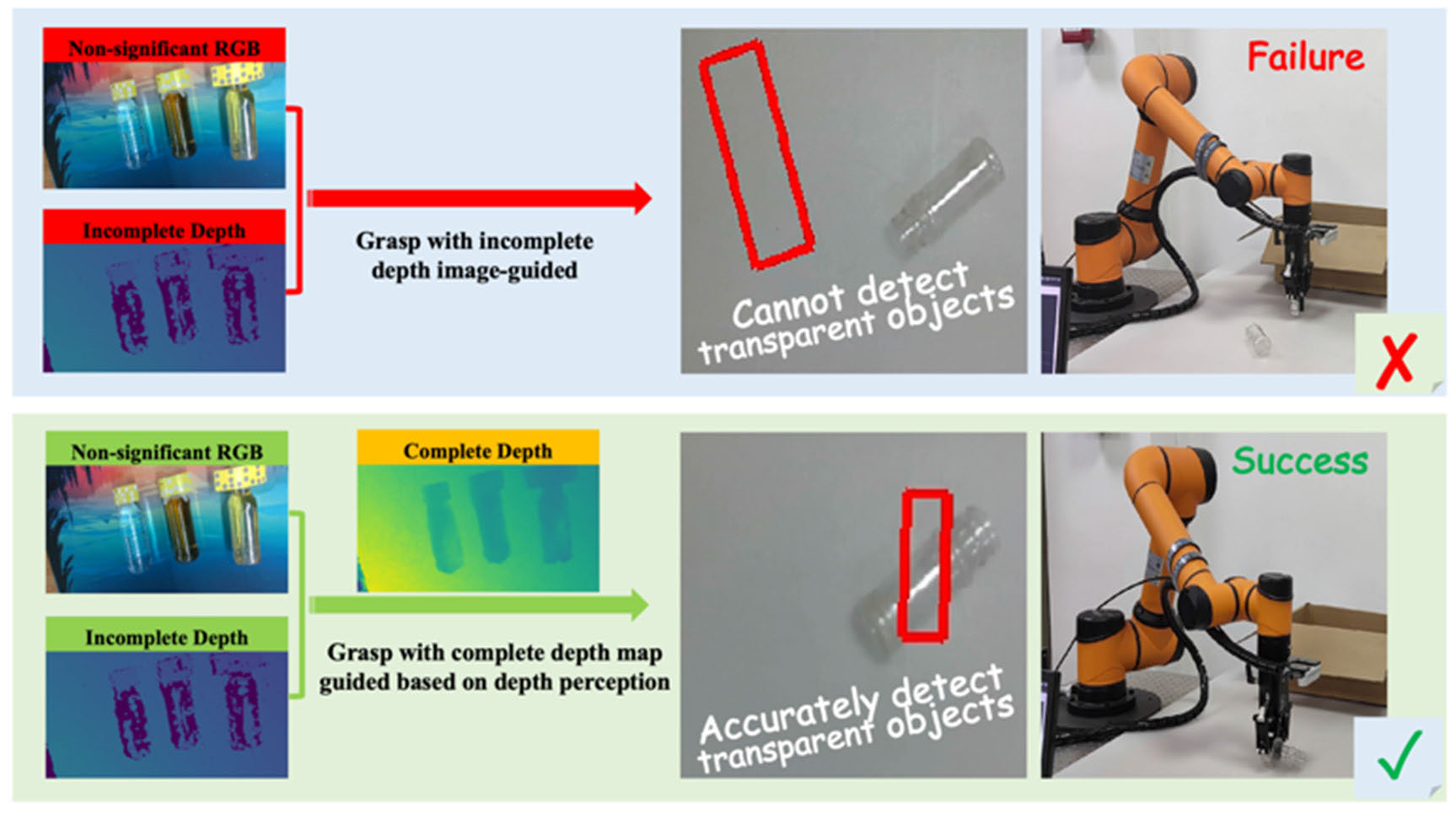

Transparent Object Depth Perception Network for Robotic Manipulation Based on Orientation-Aware Guidance and Texture Enhancement


Industrial robots frequently encounter transparent objects in their work environments. Unlike conventional objects, transparent objects often lack distinct texture features in RGB images and produce incomplete and inaccurate depth images, which poses a significant challenge to robotic perception and manipulation. As a result, many studies have focused on reconstructing depth data by encoding and decoding RGB and depth information. However, current research has two limitations: it insufficiently addresses the challenges posed by textureless transparent objects during encoding and decoding, and it places inadequate emphasis on capturing shallow features and the cross-modal interaction of RGB-D bimodal data. To overcome these limitations, this study proposes a depth perception network based on orientation-aware guidance and texture enhancement that enables robots to perceive transparent objects. The backbone network incorporates an orientation-aware guidance module that integrates shallow RGB-D features to provide a directional prior. In addition, inspired by human vision, this study designs a multibranch, multisensory field interactive texture nonlinear enhancement architecture to tackle the challenges presented by textureless transparent objects. The proposed approach is extensively validated on both public datasets and industrial robotic platforms, demonstrating highly competitive performance.
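To make "incomplete depth" concrete: consumer RGB-D sensors typically report missing readings on transparent surfaces as 0 (or NaN, depending on the driver). A common preprocessing step for depth-reconstruction networks is to feed the raw depth together with an explicit validity mask. The NumPy sketch below does that and measures how much of an object's depth is missing. The 0/NaN convention, the `max_depth` cut-off and the function names are assumptions for illustration; the network's actual input pipeline is not specified in the abstract.

```python
import numpy as np

def prepare_depth_input(depth, max_depth=2.0):
    """
    depth: raw depth map in metres, shape (H, W); 0 or NaN is assumed to mark a missing reading.
    max_depth: hypothetical working range; readings beyond it are treated as invalid.
    Returns a (2, H, W) float32 array: [normalised depth with holes set to 0, validity mask].
    """
    depth = np.asarray(depth, dtype=np.float32)
    valid = np.isfinite(depth) & (depth > 0) & (depth <= max_depth)
    norm = np.where(valid, depth / max_depth, 0.0).astype(np.float32)
    return np.stack([norm, valid.astype(np.float32)])

def missing_ratio(depth, object_mask, max_depth=2.0):
    """Fraction of pixels inside `object_mask` (bool, (H, W)) that lack a valid depth reading."""
    valid = prepare_depth_input(depth, max_depth)[1] > 0
    obj = np.asarray(object_mask, dtype=bool)
    return float((obj & ~valid).sum() / max(int(obj.sum()), 1))
```

On a glass cup, `missing_ratio` over the cup's mask is typically large, which is why the validity mask is passed to the network explicitly instead of letting it treat zeros as real measurements.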

Yunhui Yan, Hongkun Tian, Kechen Song, Yuntian Li, Yi Man, Ling Tong. Transparent Object Depth Perception Network for Robotic Manipulation Based on Orientation-aware Guidance and Texture Enhancement [J]. IEEE Transactions on Instrumentation and Measurement, 2024, 73, 7505711. (paper)

SISG-Net: Simultaneous Instance Segmentation and Grasp Detection

Grasping in cluttered scenes has long been challenging for robots because of complex background information and changing operating environments. To enable robots to perform multi-object grasping tasks in a wider range of application scenarios, such as object sorting on industrial production lines and object manipulation by home service robots, we integrate segmentation and grasp detection into a single framework and design a simultaneous instance segmentation and grasp detection network (SISG-Net), with which robots can better interact with complex environments and perform grasp tasks. To address the insufficient fusion of existing RGB-D fusion strategies in robotics, we propose a lightweight RGB-D fusion module called SMCF that makes modal fusion more efficient. To address the inaccurate perception of small objects in different scenes, we propose the FFASP module. Finally, we use the AFF module to adaptively fuse multi-scale features. Segmentation removes noisy background information, enabling the robot to grasp robustly against different backgrounds. Using the segmentation result, we refine grasp detection and find the best grasp pose for robot grasping in complex scenes. Our grasp detection model performs on par with state-of-the-art grasp detection algorithms on the Cornell Dataset and achieves state-of-the-art performance on the OCID Dataset. Real-world grasping experiments show that the method is stable and robust. The code and experiment videos for this paper are available at: https://github.com/meiguiz/SISG-Net
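One simple way segmentation can refine grasp detection is to discard candidates whose centre falls on the background and keep the highest-quality candidate for each segmented instance. The sketch below shows that filtering step under assumptions: grasps are `(x, y, angle, width, quality)` tuples in pixel coordinates and instance masks come as a boolean `(N, H, W)` array. The function name and data layout are hypothetical; the abstract does not specify how SISG-Net performs its refinement.

```python
import numpy as np

def best_grasp_per_instance(grasps, instance_masks):
    """
    grasps: list of (x, y, angle, width, quality) tuples in pixel coordinates.
    instance_masks: boolean array (N, H, W), one mask per segmented instance.
    Returns {instance_id: best grasp} for every instance containing at least one grasp centre.
    Grasps centred on background pixels (covered by no mask) are dropped.
    """
    masks = np.asarray(instance_masks, dtype=bool)
    _, h, w = masks.shape
    best = {}
    for g in grasps:
        x, y = int(round(g[0])), int(round(g[1]))
        if not (0 <= x < w and 0 <= y < h):
            continue  # centre lies outside the image
        for k in np.flatnonzero(masks[:, y, x]):  # instances covering this pixel
            k = int(k)
            if k not in best or g[4] > best[k][4]:
                best[k] = g
    return best
```

The robot can then execute the returned grasps one instance at a time, which is one way of turning a per-pixel detector into a per-object picking order in clutter.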


Yunhui Yan, Ling Tong, Kechen Song, Hongkun Tian, Yi Man, Wenkang Yang. SISG-Net: Simultaneous Instance Segmentation and Grasp Detection for Robot Grasp in Clutter [J]. Advanced Engineering Informatics, 2023, 58, 102189. (paper) (code)

Antipodal-Points-aware Dual-decoding Network for Robotic Visual Grasp Detection Oriented to Multi-object Clutter Scenes


It is challenging for robots to detect grasps with high accuracy and efficiency in multi-object clutter scenes, especially scenes containing objects of widely differing scales. Effective grasp representation, full utilization of data, and well-formulated grasping strategies are critical to solving this problem. To this end, this paper proposes an antipodal-points grasp representation model, on the basis of which the Antipodal-Points-aware Dual-decoding Network (APDNet) is presented for grasp detection in multi-object scenes. APDNet employs an encoding–decoding architecture. In the encoder, a shared encoding strategy based on an Adaptive Gated Fusion Module (AGFM) fuses RGB-D multimodal data. Two decoding branches, StartpointNet and EndpointNet, detect the antipodal points. To better focus on objects at different scales in multi-object scenes, a global multi-view cumulative attention mechanism, the Global Accumulative Attention Mechanism (GAAM), is also designed for StartpointNet. The proposed method is comprehensively validated and compared on a public dataset and a real robot platform. On the GraspNet-1Billion dataset, the proposed method achieves accuracies of 30.7%, 26.4%, and 12.7% for seen, unseen, and novel objects, respectively, at a speed of 88.4 FPS. On the AUBO robot platform, the detection and grasp success rates are 100.0% and 95.0% in single-object scenes and 97.0% and 90.3% in multi-object scenes, respectively. These results demonstrate that the proposed method achieves state-of-the-art performance with a good balance of accuracy and efficiency.
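An antipodal-points representation describes a parallel-jaw grasp by its two contact points instead of a centre, angle and width. Assuming the start point and end point are the two jaw contacts in image coordinates, the familiar grasp parameters follow from plain geometry, as in the sketch below. The function name is hypothetical and the exact parameterisation used by APDNet is not given in the abstract.

```python
import math

def antipodal_to_grasp(p_start, p_end):
    """
    p_start, p_end: (x, y) pixel coordinates of the two antipodal contact points.
    Returns (cx, cy, angle, width): grasp centre, jaw-axis angle in [-pi/2, pi/2), opening width.
    """
    (x1, y1), (x2, y2) = p_start, p_end
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2       # grasp centre is the midpoint
    width = math.hypot(x2 - x1, y2 - y1)        # opening equals the contact distance
    angle = math.atan2(y2 - y1, x2 - x1)        # direction from start to end point
    # a parallel-jaw grasp is symmetric under a 180-degree rotation, so wrap the angle
    angle = (angle + math.pi / 2) % math.pi - math.pi / 2
    return cx, cy, angle, width
```

Because of the wrap, swapping the start and end points yields the same grasp, which matches the physical symmetry of a two-finger gripper.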

Hongkun Tian, Kechen Song, Jing Xu, Shuai Ma, Yunhui Yan. Antipodal-Points-aware Dual-decoding Network for Robotic Visual Grasp Detection Oriented to Multi-object Clutter Scenes [J]. Expert Systems With Applications, 2023, 230, 120545. (paper)

SG-Grasp: Semantic Segmentation Guided Robotic Grasp Oriented to Weakly Textured Objects Based on Visual Perception Sensors

Weakly textured objects are frequently manipulated by industrial and domestic robots; the two most common types are transparent and reflective objects. Their unique visual properties challenge even advanced grasp detection algorithms. Many existing algorithms rely heavily on depth information, which ordinary red-green-blue and depth (RGB-D) sensors cannot accurately provide for transparent and reflective objects. To overcome this limitation, we propose a solution that uses semantic segmentation to segment weakly textured objects and guide grasp detection. Using only the red-green-blue (RGB) images from RGB-D sensors, our segmentation algorithm (RTSegNet) achieves state-of-the-art performance on the newly proposed TROSD dataset. Importantly, our method enables robots to grasp transparent and reflective objects without retraining the grasp detection network (which is trained solely on the Cornell dataset). Real-world robot experiments demonstrate the robustness of our approach in grasping common weakly textured objects, and results on various datasets validate the effectiveness and robustness of our segmentation algorithm. Code and video are available at: https://github.com/meiguiz/SG-Grasp.
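A minimal way for a segmentation mask to "guide" a pixel-wise grasp detector without retraining it is to restrict grasp selection to pixels inside the predicted object mask, so spurious high-quality responses on the background or on corrupted depth elsewhere can never be chosen. The NumPy sketch below assumes the grasp network outputs an `(H, W)` quality map; the function name is hypothetical, and the actual guidance mechanism in SG-Grasp may differ.

```python
import numpy as np

def masked_best_pixel(quality, seg_mask):
    """
    quality: (H, W) grasp-quality map from a pixel-wise grasp detector.
    seg_mask: (H, W) boolean mask of the target object (e.g. a transparent or
              reflective object) predicted from the RGB image alone.
    Returns (row, col) of the best grasp pixel inside the mask, or None if the mask is empty.
    """
    q = np.asarray(quality, dtype=float)
    m = np.asarray(seg_mask, dtype=bool)
    if not m.any():
        return None
    masked = np.where(m, q, -np.inf)  # pixels outside the object can never be selected
    r, c = np.unravel_index(np.argmax(masked), masked.shape)
    return int(r), int(c)
```

In this sketch the grasp network stays frozen; only the selection step changes, which is consistent with the abstract's point that no retraining on transparent or reflective objects is needed.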


Ling Tong, Kechen Song, Hongkun Tian, Yi Man, Yunhui Yan, Qinggang Meng. SG-Grasp: Semantic Segmentation Guided Robotic Grasp Oriented to Weakly Textured Objects Based on Visual Perception Sensors [J]. IEEE Sensors Journal, 2023, 23(22), 28430-28441. (paper) (code)

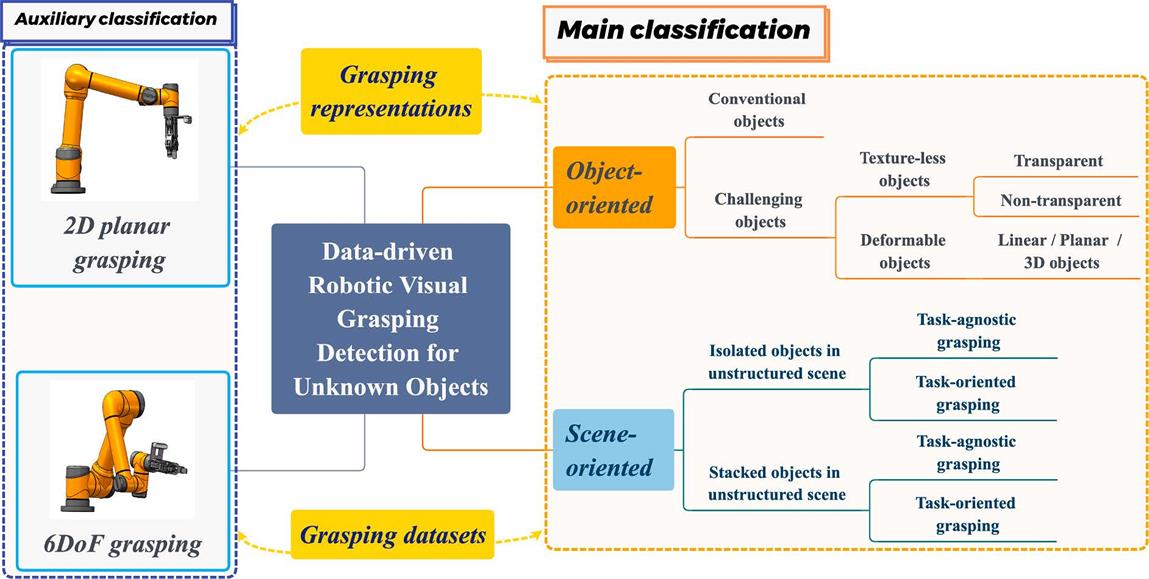

Data-Driven Robotic Visual Grasping Detection for Unknown Objects


This paper presents a comprehensive survey of data-driven robotic visual grasping detection (DRVGD) for unknown objects. Guided by the problem of DRVGD for unknown objects, we review both object-oriented and scene-oriented aspects. Object-oriented DRVGD focuses on the physical properties of unknown objects, such as shape, texture, and rigidity, by which objects can be classified as conventional or challenging. Scene-oriented DRVGD focuses on unstructured scenes and is explored from two aspects based on object-to-object position relationships: grasping isolated objects and grasping stacked objects in unstructured scenes. In addition, this paper provides a detailed review of the associated grasping representations and datasets. Finally, the challenges and future directions of DRVGD are discussed.

Hongkun Tian, Kechen Song, Song Li, Shuai Ma, Jing Xu and Yunhui Yan. Data-driven Robotic Visual Grasping Detection for Unknown Objects: A Problem-oriented Review [J]. Expert Systems With Applications, 2023, 211, 118624. (paper)

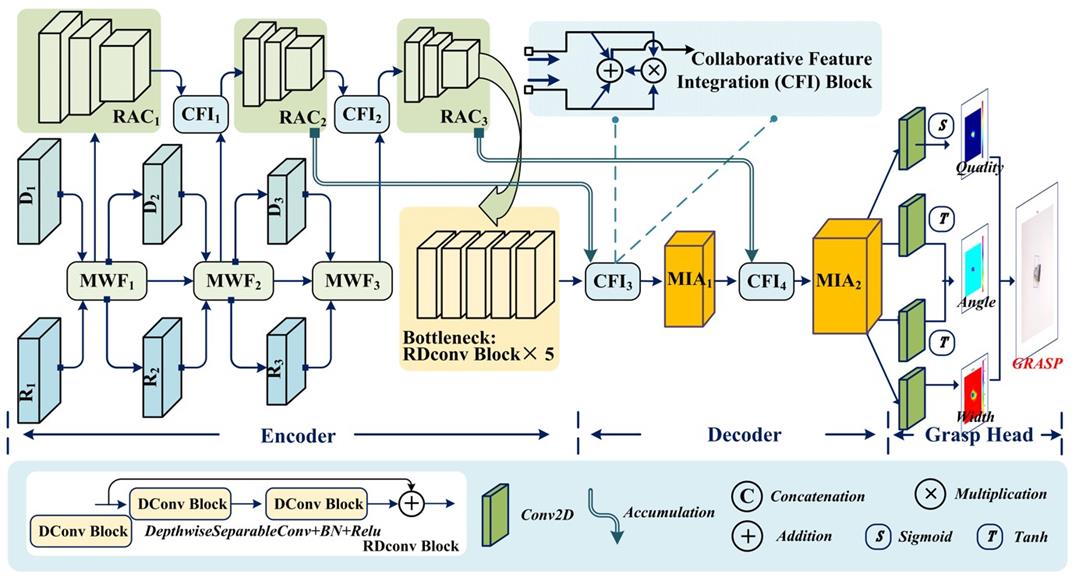

Rotation Adaptive Grasping Estimation Network Oriented to Unknown Objects Based on Novel RGB-D Fusion Strategy

This paper proposes a rotation adaptive grasping estimation framework based on a novel RGB-D fusion strategy. Specifically, RGB and depth data are fused with shared weights in stages using the proposed Multi-step Weight-learning Fusion (MWF) strategy. The spatial position encoding is learned autonomously by the proposed Rotation Adaptive Conjoin (RAC) encoder, achieving spatial and rotational adaptiveness for unknown objects in unknown poses. In addition, a Multi-dimensional Interaction-guided Attention (MIA) decoding strategy based on the fused multiscale features is proposed to highlight useful elements and suppress invalid ones. The method has been validated on the Cornell and Jacquard grasping datasets, with cross-validation accuracies of 99.3% and 94.6%, respectively. The single-object and multi-object scene grasping success rates on the robot platform are 95.625% and 87.5%, respectively.
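Cross-validation on the Cornell dataset is commonly reported for two kinds of split: image-wise, where images are shuffled individually, and object-wise, where all images of the same object land in the same fold so test objects are never seen in training. The standard-library sketch below generates both kinds of k-fold split. The function name and the `object_of` mapping are hypothetical, and the abstract does not state which split or fold count produced the figures above.

```python
import random
from collections import defaultdict

def kfold_splits(sample_ids, object_of, k=5, object_wise=False, seed=0):
    """
    sample_ids: list of sample identifiers (e.g. image file names).
    object_of: dict mapping sample id -> object id (only used when object_wise=True).
    Yields (train_ids, test_ids) for each of the k folds.
    """
    rng = random.Random(seed)
    if object_wise:
        groups = defaultdict(list)
        for s in sample_ids:
            groups[object_of[s]].append(s)  # keep every image of an object together
        units = list(groups.values())
    else:
        units = [[s] for s in sample_ids]   # each image is its own unit
    rng.shuffle(units)
    folds = [units[i::k] for i in range(k)]  # round-robin assignment of units to folds
    for i in range(k):
        test = [s for unit in folds[i] for s in unit]
        train = [s for j in range(k) if j != i for unit in folds[j] for s in unit]
        yield train, test
```

The reported cross-validation accuracy is then the mean of the per-fold test accuracies. Object-wise splits are the stricter test of generalisation to unknown objects.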


Hongkun Tian, Kechen Song, Song Li, Shuai Ma, Yunhui Yan. Rotation Adaptive Grasping Estimation Network Oriented to Unknown Objects Based on Novel RGB-D Fusion Strategy [J]. Engineering Applications of Artificial Intelligence, 2023, 120, 105842. (paper)


Lightweight Pixel-Wise Generative Robot Grasping Detection


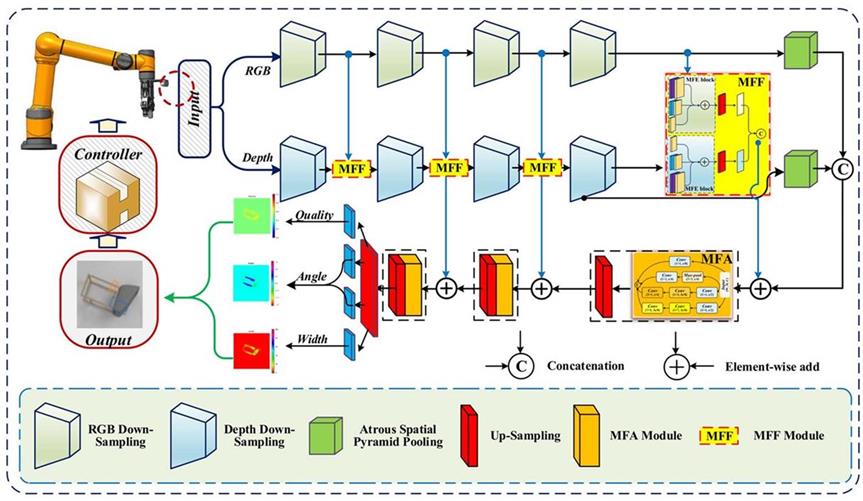

Grasp detection is one of the essential tasks for robots to achieve automation and intelligence. Existing grasp detection relies mainly on data-driven discriminative and generative strategies, and generative strategies have significant efficiency advantages over discriminative ones. RGB and depth (RGB-D) data are widely used as grasping data sources because they carry sufficient information and are inexpensive to acquire, and RGB-D fusion has shown advantages over using RGB or depth alone. However, existing research has mainly focused on early fusion and late fusion, which struggle to fully utilize the information from both modalities. Improving grasping accuracy while leveraging the knowledge of both modalities and keeping the model lightweight and real-time is therefore crucial. This article proposes a pixel-wise RGB-D dense fusion method based on a generative strategy. The technique is validated experimentally on both public datasets and a real robot platform. It achieves accuracies of 98.9% and 94.0% on the Cornell and Jacquard datasets, respectively, and processes a single image in only 15 ms. On the AUBO i5 robotic platform with a DH-AG-95 parallel gripper, the average grasp success rate reached 94.0% in single-object scenes, 86.7% in three-object scenes, and 84% in five-object scenes. Our approach outperforms existing state-of-the-art methods.
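A generative (pixel-wise) grasp detector predicts a grasp at every pixel instead of scoring sampled candidates. A widely used output format, popularised by GG-CNN, is a set of per-pixel maps: grasp quality, the angle encoded as cos 2θ and sin 2θ (so θ and θ + π give the same target), and gripper width. The abstract does not state which encoding this network uses, so the NumPy sketch below assumes that format; the function name is hypothetical. Decoding then reduces to one `argmax`, which is part of why generative strategies are efficient.

```python
import numpy as np

def decode_pixelwise_grasp(quality, cos2t, sin2t, width):
    """
    quality, cos2t, sin2t, width: (H, W) output maps of a pixel-wise grasp network
    (assumed GG-CNN-style encoding).
    Returns (row, col, angle_rad, width) of the grasp at the peak of the quality map.
    """
    r, c = np.unravel_index(np.argmax(quality), quality.shape)
    # recover theta from the doubled-angle encoding; result lies in (-pi/2, pi/2]
    angle = 0.5 * np.arctan2(sin2t[r, c], cos2t[r, c])
    return int(r), int(c), float(angle), float(width[r, c])
```

Multi-object scenes are usually handled the same way, by taking several local maxima of the quality map instead of only the global one.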

Hongkun Tian, Kechen Song, Song Li, Shuai Ma and Yunhui Yan. Light-weight Pixel-wise Generative Robot Grasping Detection Based on RGB-D Dense Fusion [J]. IEEE Transactions on Instrumentation and Measurement, 2022, 71, 5017912. (paper) (video)
