Kechen Song (Associate Professor)

- Supervisor of Doctorate Candidates; Supervisor of Master's Candidates

- Name (English): Kechen Song

- E-Mail:

- Education Level: With Certificate of Graduation for Doctorate Study

- Gender: Male

- Degree: Doctorate

- Status: Employed

- Alma Mater: Northeastern University (东北大学)

- Teacher College: School of Mechanical Engineering and Automation

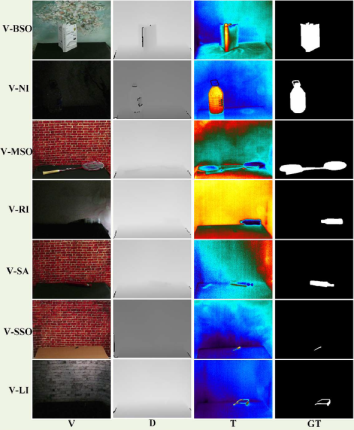

A Novel Visible-Depth-Thermal Image Dataset of Salient Object Detection

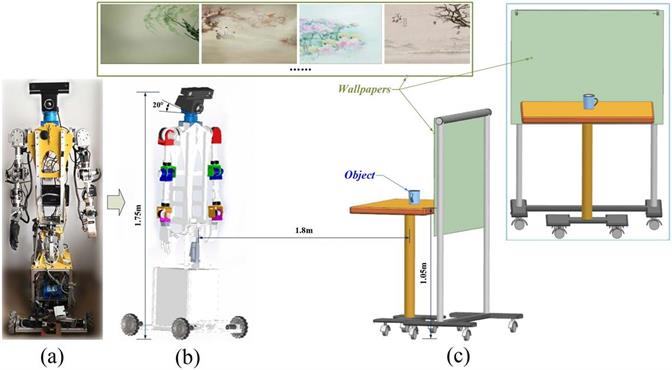

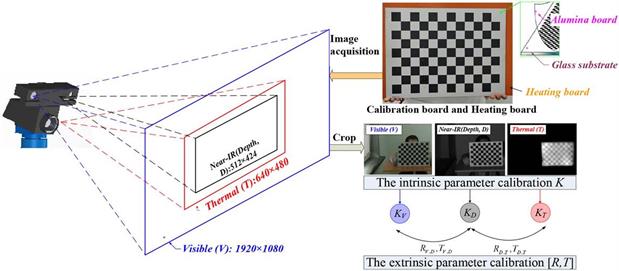

| Visual perception plays an important role in the industrial information field, especially in robotic grasping applications. To detect the object to be grasped quickly and accurately, salient object detection (SOD) is applied to this task. Although existing SOD methods have achieved impressive performance, they still have limitations in the complex interference environments of practical applications. To better handle such environments, a novel triple-modal image fusion strategy is proposed to implement SOD for robotic visual perception, namely visible-depth-thermal (VDT) SOD. Meanwhile, we build an image acquisition system for variable lighting scenes and construct a novel benchmark dataset for VDT SOD (the VDT-2048 dataset). Images from multiple modalities assist each other in highlighting the salient regions, but they inevitably introduce interference as well. To achieve effective cross-modal feature fusion while suppressing this interference, a hierarchical weighted suppress interference (HWSI) method is proposed. Comprehensive experimental results show that our method achieves better performance than state-of-the-art methods. |

| Kechen Song, et al. A Novel Visible-Depth-Thermal Image Dataset of Salient Object Detection for Robotic Visual Perception [J]. IEEE/ASME Transactions on Mechatronics, 2022. (paper) (Dataset & Code) | |

RGB-T Image Analysis Technology and Application: A Survey

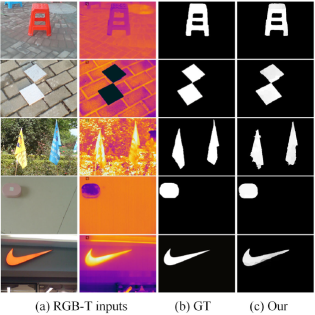

| RGB-Thermal infrared (RGB-T) image analysis has been actively studied in recent years. Over the past decade, it has received wide attention and made important research progress in many applications. This paper provides a comprehensive review of RGB-T image analysis technology and application, covering several active fields: image fusion, salient object detection, semantic segmentation, pedestrian detection, object tracking, and person re-identification. The first two are preprocessing technologies for many computer vision tasks, and the rest are application directions. This paper extensively reviews 400+ papers spanning more than 10 different application tasks. Furthermore, for each specific task, it comprehensively analyzes the various methods and presents the performance of the state-of-the-art methods. It also provides an in-depth analysis of the challenges for RGB-T image analysis, as well as some potential technical improvements for the future. |

| Kechen Song, Ying Zhao, et al. RGB-T Image Analysis Technology and Application: A Survey [J]. Engineering Applications of Artificial Intelligence, 2023, 120, 105919. (paper) | |

RGB-T Salient Object Detection

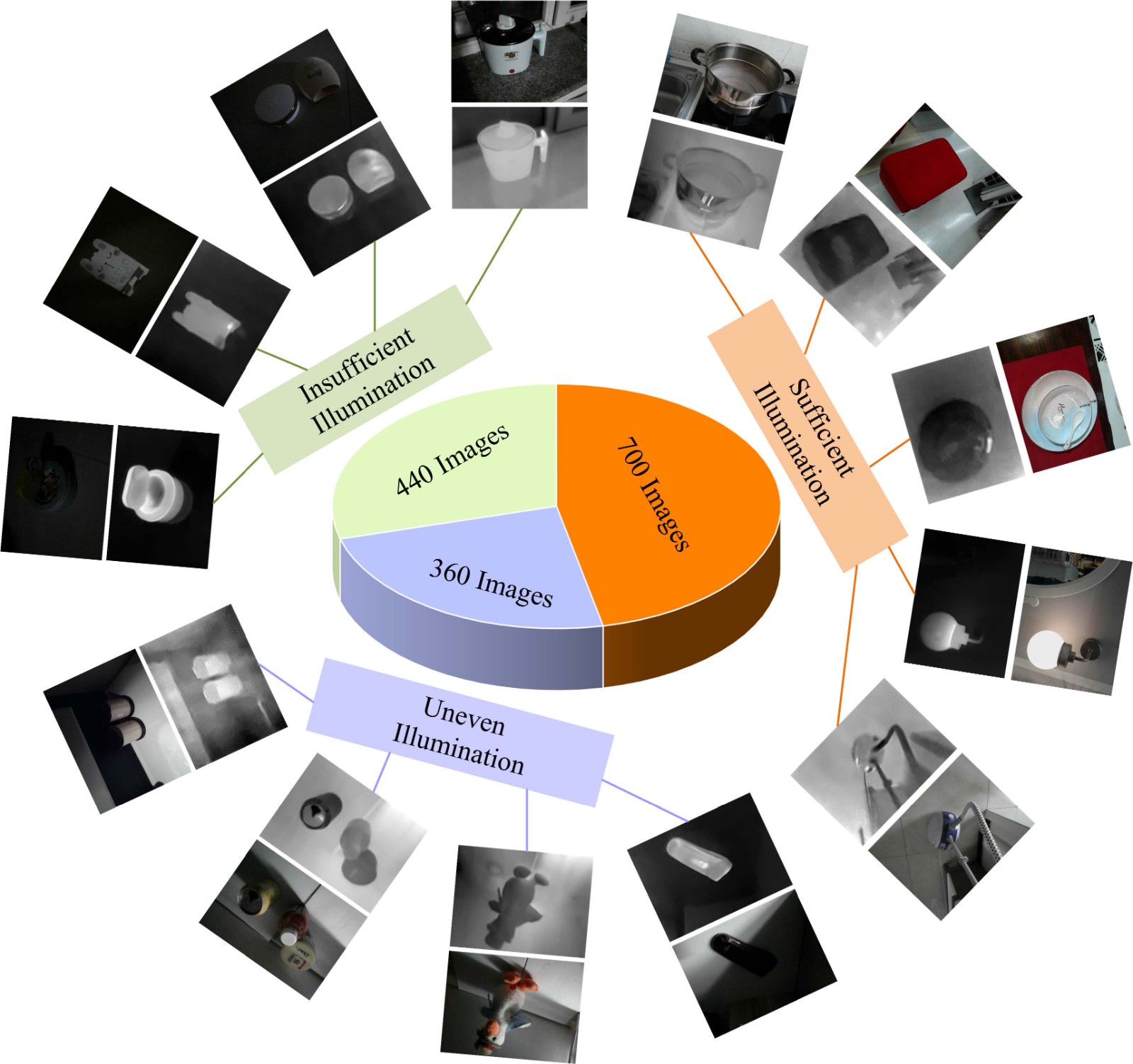

| A Variable Illumination Dataset: VI-RGBT1500 | |

| We propose a variable illumination dataset named VI-RGBT1500 for RGBT image SOD. This is the first time that different illuminations have been taken into account in the construction of an RGBT SOD dataset. Three illumination conditions (sufficient, uneven, and insufficient illumination) are used to collect 1500 pairs of RGBT images. |

| Kechen Song, Liming Huang, et al. Multiple Graph Affinity Interactive Network and A Variable Illumination Dataset for RGBT Image Salient Object Detection [J]. IEEE Transactions on Circuits and Systems for Video Technology, 2023. (paper)(code & dataset) | |

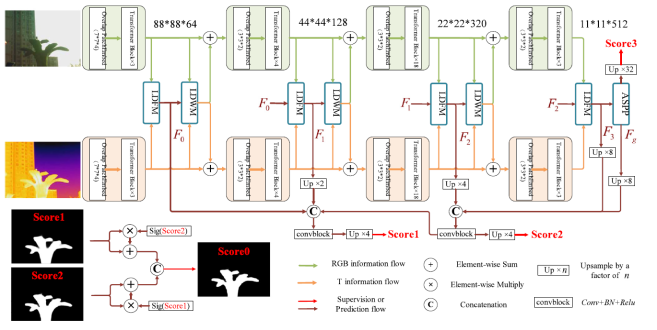

| TAGFNet | |

| Current RGB-T datasets contain only a tiny amount of low-illumination data, so RGB-T SOD methods trained on them do not detect salient objects well in extremely low-illumination scenes. One way to improve performance on low-illumination data is to spend considerable labor labeling more of it. We instead take a different approach that makes full use of the characteristics of thermal (T) images, and propose a T-aware guided early fusion network for cross-illumination salient object detection. |

| Han Wang, Kechen Song, et al. Thermal Images-Aware Guided Early Fusion Network for Cross-Illumination RGB-T Salient Object Detection [J]. Engineering Applications of Artificial Intelligence, 2023, 118, 105640. (paper)(code) | |

| CGFNet: Cross-Guided Fusion Network for RGB-T Salient Object Detection | |

| A novel Cross-Guided Fusion Network (CGFNet) for RGB-T salient object detection is proposed. Specifically, a Cross-Scale Alternate Guiding Fusion (CSAGF) module is proposed to mine the high-level semantic information and provide global context support. Subsequently, we design a Guidance Fusion Module (GFM) to achieve sufficient cross-modality fusion by using one modality as the main guidance and the other as auxiliary. Finally, the Cross-Guided Fusion Module (CGFM) is presented and serves as the main decoding block. |

| Jie Wang, Kechen Song, et al. CGFNet: Cross-Guided Fusion Network for RGB-T Salient Object Detection [J]. IEEE Transactions on Circuits and Systems for Video Technology, 2022, 32(5), 2949-2961. (paper)(code)(ESI highly cited, 11/2022-1/2023) | |

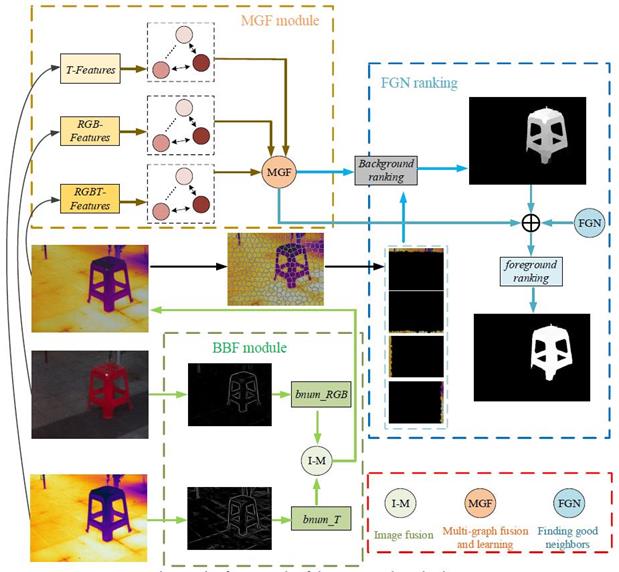

| Multi-graph Fusion and Learning for RGBT Image Saliency Detection | |

| This research presents an unsupervised RGBT saliency detection method based on multi-graph fusion and learning. First, RGB images and T images are adaptively fused based on boundary information to produce more accurate superpixels. Next, a multi-graph fusion model is proposed to selectively learn useful information from multi-modal images. Finally, we apply the theory of finding good neighbors to the graph affinity and propose different algorithms for the two stages of saliency ranking. Experimental results on three RGBT datasets show that the proposed method is effective compared with state-of-the-art algorithms. |

| Liming Huang, Kechen Song, et al. Multi-graph Fusion and Learning for RGBT Image Saliency Detection [J]. IEEE Transactions on Circuits and Systems for Video Technology, 2022, 32(3), 1366-1377. (paper)(code)(ESI highly cited, 1/2023) | |

| Unidirectional RGB-T salient object detection | |

| The U-shaped encoder–decoder architecture based on CNNs has become deeply rooted in salient object detection (SOD) tasks, and it has revealed two drawbacks while driving the rapid development of saliency detection. (1) The inherent characteristics of CNNs make it difficult to learn long-range dependencies and model global correlations. (2) To improve saliency detection performance, the encoder and decoder should complement each other and work together. However, the existing encoder–decoder architecture treats the encoder and decoder independently of each other. Specifically, the encoder is responsible for extracting features, and the decoder fuses multi-level or multi-modal features to produce prediction maps. That is, the encoder alone serves the decoder, while the valuable information produced by decoder fusion does not feed back into feature extraction. Therefore, we propose a unidirectional RGB-T salient object detection network with intertwined driving of encoding and fusion to solve the above problems. |

| Jie Wang, Kechen Song, et al. Unidirectional RGB-T salient object detection with intertwined driving of encoding and fusion [J]. Engineering Applications of Artificial Intelligence, 2022, 114, 105162. (paper) | |

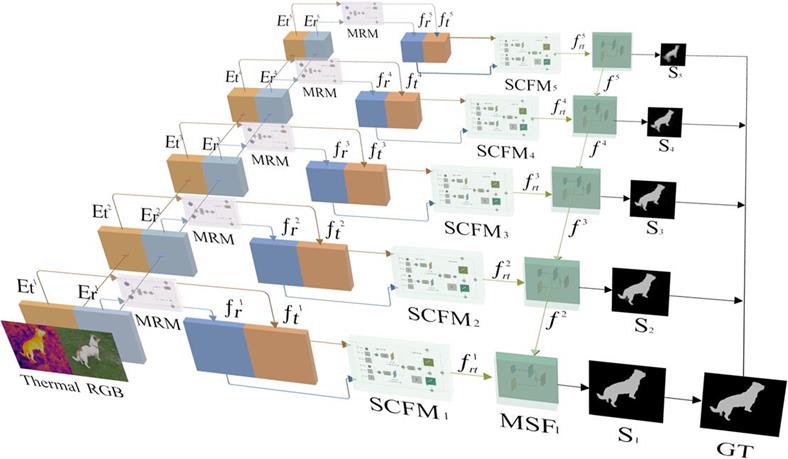

| MCFNet | |

| We propose a novel Modal Complementary Fusion Network (MCFNet) to alleviate the contamination effect of low-quality images from both global and local perspectives. Specifically, we design a modal reweight module (MRM) to evaluate the global quality of images and adaptively reweight RGB-T features by explicitly modelling interdependencies between RGB and thermal images. Furthermore, we propose a spatial complementary fusion module (SCFM) to explore the complementary local regions between RGB-T images and selectively fuse multi-modal features. Finally, multi-scale features are fused to obtain the saliency detection result. |

| Shuai Ma, Kechen Song, et al. Modal Complementary Fusion Network for RGB-T Salient Object Detection [J]. Applied Intelligence, 2023, 53, 9038-9055. (paper) (code) | |
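As a rough illustration of the general idea of globally reweighting two modalities before fusion, the sketch below pools each feature map into a global descriptor, passes the joint descriptor through a small two-layer mapping, and uses a softmax to obtain one weight per modality. This is not the MRM from the paper: the function names `softmax` and `reweight_modalities`, the weight matrices `w1` and `w2`, and the overall layer layout are assumptions made only for this example.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def reweight_modalities(rgb_feat, t_feat, w1, w2):
    """Fuse two (C, H, W) feature maps with one global weight per modality.

    w1 has shape (K, 2C) and w2 has shape (2, K); both are hypothetical
    parameters that would normally be learned.
    """
    # Global average pooling gives one descriptor per modality.
    desc = np.concatenate([rgb_feat.mean(axis=(1, 2)),
                           t_feat.mean(axis=(1, 2))])   # shape (2C,)
    hidden = np.maximum(w1 @ desc, 0.0)                  # ReLU, shape (K,)
    weights = softmax(w2 @ hidden)                       # shape (2,), sums to 1
    # Weighted sum of the two modalities.
    fused = weights[0] * rgb_feat + weights[1] * t_feat
    return fused, weights


rng = np.random.default_rng(0)
rgb = rng.standard_normal((4, 8, 8))
thermal = rng.standard_normal((4, 8, 8))
w1 = rng.standard_normal((8, 8))
w2 = rng.standard_normal((2, 8))
fused, weights = reweight_modalities(rgb, thermal, w1, w2)
```

With random parameters the weights carry no meaning; in a trained network they would be expected to shift toward the more reliable modality for each image pair.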

| Low-rank Tensor Learning and Unified Collaborative Ranking | |

| We propose a novel RGB-T saliency detection method in this letter. To this end, we first regard superpixels as graph nodes and calculate the affinity matrix for each feature. Then, we propose a low-rank tensor learning model for the graph affinity, which can suppress redundant information and improve the relevance of similar image regions. Finally, a novel ranking algorithm is proposed to jointly obtain the optimal affinity matrix and saliency values under a unified structure. Test results on two RGB-T datasets show that the proposed method performs well against state-of-the-art algorithms. |

| Liming Huang, Kechen Song, et al. RGB-T Saliency Detection via Low-rank Tensor Learning and Unified Collaborative Ranking [J]. IEEE Signal Processing Letters, 2020, 27, 1585-1589. (paper) (code and datasets) | |
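For readers unfamiliar with graph-based saliency ranking, the sketch below shows the generic building blocks mentioned above: nodes with feature vectors, a Gaussian affinity matrix, and a closed-form ranking step. It uses standard manifold ranking, f = (D - αW)⁻¹ y, and does not implement the low-rank tensor learning or the unified collaborative ranking of the paper. The function name `rank_saliency`, the parameters `sigma` and `alpha`, and the toy features are assumptions chosen for illustration.

```python
import numpy as np


def rank_saliency(features, queries, sigma=0.2, alpha=0.99):
    """Rank graph nodes against query nodes with f = (D - alpha * W)^-1 y."""
    # Pairwise squared distances between node (superpixel) features.
    diff = features[:, None, :] - features[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)
    # Gaussian affinity matrix without self-loops.
    W = np.exp(-dist2 / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    D = np.diag(W.sum(axis=1))
    # Indicator vector marking the query nodes.
    y = np.zeros(len(features))
    y[list(queries)] = 1.0
    f = np.linalg.solve(D - alpha * W, y)
    # Rescale the scores to [0, 1].
    return (f - f.min()) / (f.max() - f.min() + 1e-12)


# Toy example: two well-separated clusters of three nodes each.
features = np.array([[0.0], [0.05], [0.1], [1.0], [1.05], [1.1]])
scores = rank_saliency(features, queries=[0])
```

In this toy case the nodes that share a cluster with the query receive clearly higher scores than the nodes in the other cluster, which is the behavior a ranking-based saliency method relies on.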

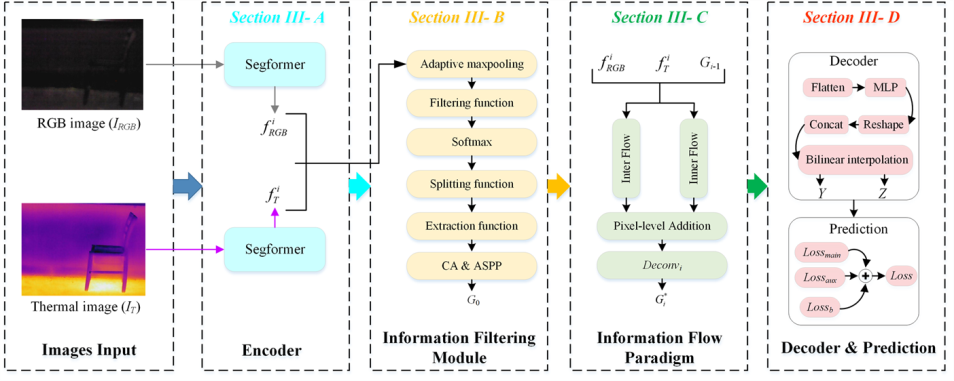

| IFFNet | |

| A novel information flow fusion network (IFFNet) is proposed for RGB-T cross-modal images. The proposed IFFNet consists of an information filtering module and a novel information flow paradigm. Validation on three available RGB-T salient object detection datasets shows that our proposed method is more competitive than state-of-the-art methods. |

| Kechen Song, Liming Huang, et al. A Potential Vision-Based Measurements Technology: Information Flow Fusion Detection Method Using RGB-Thermal Infrared Images [J]. IEEE Transactions on Instrumentation & Measurement, 2023, 72, 5004813. (paper) (code) | |

| GRNet: Cross-Modality Salient Object Detection Network with Universality and Anti-interference | |

| Although cross-modality salient object detection has achieved excellent results, current methods still need to improve in terms of universality and anti-interference. Therefore, we propose a cross-modality salient object detection network with universality and anti-interference. First, we offer a feature extraction strategy that enhances the features during the feature extraction stage. It promotes the mutual improvement of different modal information and prevents interference from affecting the subsequent process. Then we use the graph mapping reasoning module (GMRM) to infer the high-level semantics and obtain valuable information. This enables the proposed method to accurately locate objects in different scenes and under interference, improving its universality and anti-interference. Finally, we adopt a mutual guidance fusion module (MGFM), including a modality adaptive fusion module (MAFM) and an across-level mutual guidance fusion module (ALMGFM), to carry out an efficient and reasonable fusion of multi-scale and multi-modality information. |

| Hongwei Wen, Kechen Song, et al. Cross-Modality Salient Object Detection Network with Universality and Anti-interference [J]. Knowledge-Based Systems, 2023, 264, 110322. (paper) (code) | |

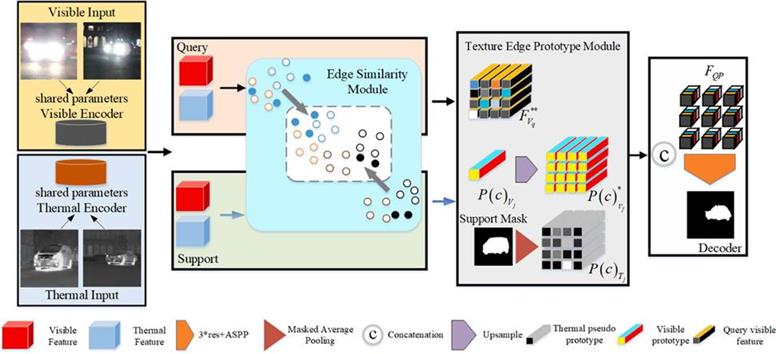

RGB-T Few-shot Semantic Segmentation

| V-TFSS | |

| Few-shot semantic segmentation (FSS) has drawn great attention in the computer vision community due to its remarkable potential for segmenting novel objects with few pixel-annotated samples. However, when the number of samples is insufficient, interference factors such as insufficient illumination and complex backgrounds pose a greater challenge to segmentation performance than in the fully supervised setting. Therefore, we propose the visible and thermal (V-T) few-shot semantic segmentation task, which utilizes the complementary and similar information of visible and thermal images to boost few-shot segmentation performance. |

| Yanqi Bao, Kechen Song, et al. Visible and Thermal Images Fusion Architecture for Few-shot Semantic Segmentation [J]. Journal of Visual Communication and Image Representation, 2021, 80, 103306. (paper)(code and datasets) | |

RGB-T Object Tracking

| Learning Discriminative Update Adaptive Spatial-Temporal Regularized Correlation Filter for RGB-T Tracking | |

| We propose a novel adaptive spatial-temporal regularized correlation filter model that learns an appropriate regularization for robust tracking, together with a relative peak discriminative method for model updating that avoids model degradation. In addition, to better integrate the unique advantages of the two modalities and adapt to the changing appearance of the target, an adaptive weighting ensemble scheme and a multi-scale search mechanism are adopted, respectively. To optimize the proposed model, we design an efficient ADMM algorithm, which greatly improves efficiency. Extensive experiments on two available datasets, RGBT234 and RGBT210, indicate that our tracker performs favorably in both accuracy and robustness against state-of-the-art RGB-T trackers. |

| Mingzheng Feng, Kechen Song, et al. Learning Discriminative Update Adaptive Spatial-Temporal Regularized Correlation Filter for RGB-T Tracking [J]. Journal of Visual Communication and Image Representation, 2020, 72, 102881. (paper) | |